We are still digesting everything we learned at one of the most intense and news-packed WWDCs of recent years.

But surely many of you noticed that the last 20 minutes of the keynote changed the tone completely. From a gala focused on user features, we moved on to development, pure and simple. In that part, Apple presented what is a revolutionary first step towards the future of native app development on its platforms: SwiftUI.

Let's explain it so that we understand its importance and what a big step it is for Apple: a step that will have an impact on the apps we use every day in the coming years.


It was a quiet night in ancient Greece …

Back in 1979, Steve Jobs, Jef Raskin and a few other Apple engineers visited Xerox PARC, looking for an evolution that had not yet arrived in the world of microcomputing. Computers had begun to reach people's homes, but a text interface with a programming language (BASIC, in the case of the Apple II at the time) did nothing to make them popular. They had to go further.

On that visit they discovered the Xerox Alto, a computer with a portrait-oriented monitor that had a graphical user interface (something almost nobody had seen before) and a curious device, called a mouse, that controlled an on-screen arrow.


The Xerox engineers, Alan Kay among them, had unknowingly built the future of computing in a project the company neither understood nor pursued. But they did not create only the computer: knowing that building applications for a graphical user interface would be very complex, they also devised their own development paradigm, one that would revolutionize the world of programming as well: the MVC (model-view-controller) pattern applied to object orientation.

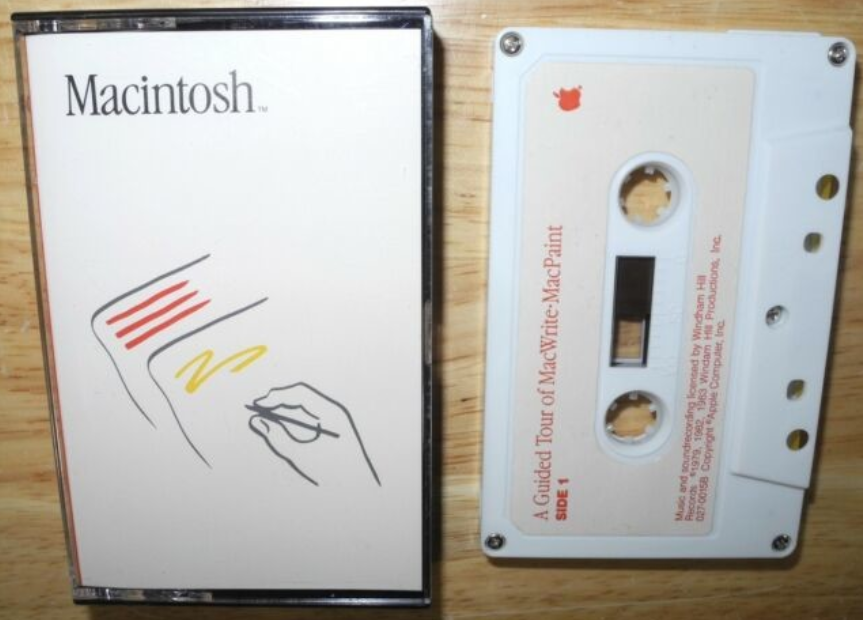

In 1983 Apple launched the Lisa, while Xerox was already selling the Star, the commercial descendant of the Alto, but their high prices (the Lisa alone cost $9,995) sent both products to failure. Then, in 1984, the Macintosh arrived, and it did two things well. First, it had a far more affordable price: $2,495. Second, it came with a series of disks with tutorials and cassette tapes (yes, tapes) that you put in your deck, pressed PLAY, and heard a step-by-step guide to using the Macintosh's graphical user interface and the different apps that came on disk.


Developing for that computer was nothing short of hell. Steve Jobs's team had focused solely on shipping the product, not on how to create software for it. Making an application for a graphical interface meant writing the code to draw every line of every button, field or element that appeared on screen: you could not reuse code, there were no libraries (or frameworks), and any element took hundreds or thousands of lines. Making software development easy was the next step Jobs wanted to take after the launch of the Macintosh but, as we know, he was pushed out of the company he had co-founded.

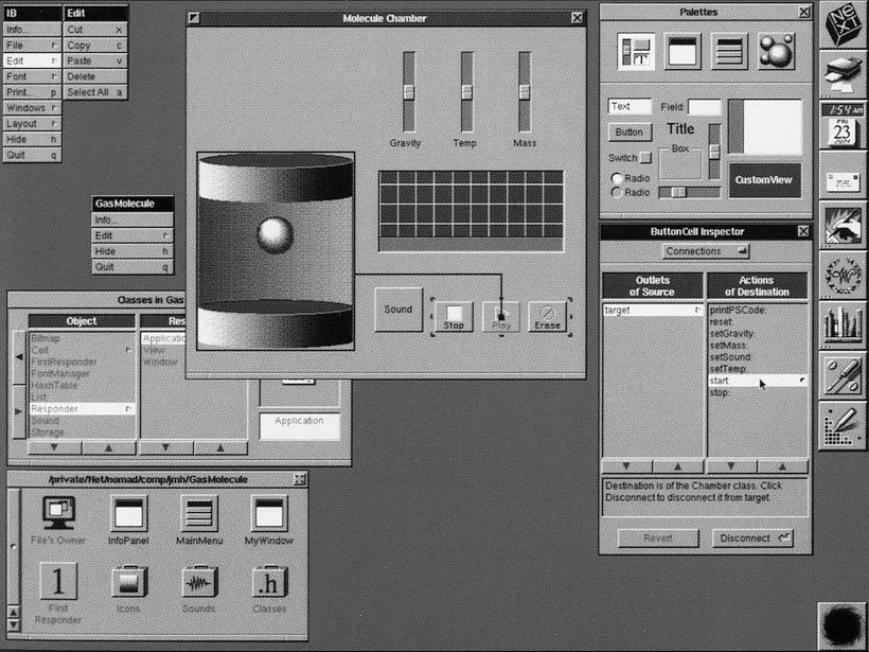

So he founded NeXT, and there he applied the other half of the great discovery he had made at Xerox PARC: the technology Alan Kay's team had developed for building applications with graphical user interfaces. The work of the entire NeXT team was released in 1988, and it was a revolution. With the new Interface Builder application and the Objective-C language, you could take a canvas and drag and drop a button, a text field, a label, a checkbox… any element. Drag and drop. Then you created a connection between the code and the graphic element (an outlet) and could work with that element as an object in code.

Not only that: everything ran on two libraries that NeXT released with all the code needed to build apps and use those components, plus basic classes for working with strings, dates and other data types: AppKit and FoundationKit. That was the moment development changed forever, and once again it was Steve Jobs who led a disruptive change in the history of computing, because frameworks in that sense were practically unheard of until then.

This technology was the reason Steve Jobs returned to Apple in 1996: the company he had co-founded had been left without a development architecture (based on Pascal) after Borland bought the main tool used to create apps for Mac OS. From there came OS X in 2001 and, in 2007, the UIKit library that brought the first iPhone to life.

Since then, nothing has changed in the architecture: we can do more things, faster and better, even with a new language like Swift. But the development architecture, the way we build apps by dragging elements onto a canvas and creating an outlet that connects them to the code, is the same. The foundations have not changed in more than 30 years… until now.


UIKit, imperative interfaces

The architecture used so far is based on what is now known as imperative interface construction: I write a function and associate it with a button, for example. I create an action. When someone touches the button, those lines of code run. Nothing simpler.

But an imperative interface has a problem that makes it less efficient: what we call “state”. Basically, these are the values we hold in our code that must be reflected on screen whenever we touch something. If I press a button, my action receives a variable (a piece of data) that is the button itself, and I can use it, for example, to change its color or its text because it was pressed. When I change that property of the button, the interface has to react immediately and update that color or text. We are changing its “state”.
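To make the imperative pattern concrete, here is a minimal plain-Swift sketch. It deliberately avoids UIKit: `SketchButton`, `addAction` and `tap()` are hypothetical stand-ins for a button and its target-action wiring, not real API. The point it illustrates is that the action receives the button itself and our code has to mutate its properties by hand.

```swift
// Hypothetical stand-in for an imperative UI button (not UIKit API).
final class SketchButton {
    var title: String
    var color: String
    private var actions: [(SketchButton) -> Void] = []

    init(title: String, color: String) {
        self.title = title
        self.color = color
    }

    // Imperative wiring: associate a function (an "action") with the button.
    func addAction(_ action: @escaping (SketchButton) -> Void) {
        actions.append(action)
    }

    // Simulates the user touching the button: every registered action runs.
    func tap() {
        for action in actions {
            action(self)
        }
    }
}

let button = SketchButton(title: "Press me", color: "blue")

// The action receives the button itself and changes its state by hand.
button.addAction { sender in
    sender.title = "Pressed"
    sender.color = "green"
}

button.tap()
print(button.title, button.color) // Pressed green
```

Nothing here describes what the screen should look like for each state; the handler mutates the button, and the rendering layer has to notice and redraw.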


Every possible value I can give an element in the interface is a state. If I have a field that can be active or not, I have a Bool property that will be true or false (its only two possible values): true means active, false means not. Two states. But I also have another Bool property for whether the field is hidden or not. I now have four possible states to manage in the interface:

- Hidden: yes, active: yes

- Hidden: no, active: yes

- Hidden: yes, active: no

- Hidden: no, active: no
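The arithmetic behind that list can be sketched in plain Swift: each independent Bool doubles the number of combinations, so n of them give 2^n states. `FieldState` and `stateCount` are illustrative names chosen for this sketch, not part of any Apple framework.

```swift
// The two Bool properties of the text field described above.
struct FieldState {
    var isHidden: Bool
    var isActive: Bool
}

// Enumerate every combination of the two properties.
var allStates: [FieldState] = []
for hidden in [true, false] {
    for active in [true, false] {
        allStates.append(FieldState(isHidden: hidden, isActive: active))
    }
}

for state in allStates {
    print("hidden: \(state.isHidden), active: \(state.isActive)")
}

// With n independent Bool properties the count doubles each time: 2^n.
func stateCount(booleanProperties n: Int) -> Int {
    return 1 << n
}

print(allStates.count)                   // 4
print(stateCount(booleanProperties: 10)) // 1024
```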

Any element of the interface has hundreds of other possible states: color, text, border color, shadow, whether it is being touched or not… a great many. The complexity is enormous, and the greater the complexity, the more it costs the system to manage an interface full of elements.

That is because the system has to stay “attentive” to all those possible state changes and combinations in order to react to them: something inefficient and very error-prone.
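A rough model of that “attentive” behavior: in the sketch below, a hypothetical `ObservableField` notifies its observers on every single property change, and each observer must work out by hand what to redraw. This is a simplification for illustration, not how UIKit is actually implemented.

```swift
// Hypothetical model of an imperative element whose every property is observed.
final class ObservableField {
    // Observers receive the name of the property that changed.
    private var observers: [(String) -> Void] = []

    var isHidden = false { didSet { notify("isHidden") } }
    var isActive = true { didSet { notify("isActive") } }
    var text = "" { didSet { notify("text") } }

    func observe(_ observer: @escaping (String) -> Void) {
        observers.append(observer)
    }

    private func notify(_ property: String) {
        for observer in observers {
            observer(property)
        }
    }
}

let field = ObservableField()
var redrawLog: [String] = []

// The interface has to react to every individual change, one by one.
field.observe { property in
    redrawLog.append("redraw after \(property) changed")
}

field.isHidden = true
field.isActive = false
field.text = "Hello"
print(redrawLog.count) // 3
```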


The answer to making interfaces more efficient came in the 90s, with markup languages such as HTML, which gives us a perfect example of a declarative interface: one that is defined as it is and does not change until the user interacts and goes to another page. The HTML page itself, while we are looking at it, never changes. It is immutable.

SwiftUI, declarative interfaces

Obviously, a completely immutable declarative interface like plain HTML is impractical. So developers set to work on ways to handle state, but with every possible change also declared in advance. That way, if the state changes because of a user interaction, the interface knows what it has to do without us changing it with any code: we already told it what should happen when we declared it.
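The declarative idea can be sketched without SwiftUI: describe the interface once as a pure function of its state, and simply evaluate it again whenever the state changes. `FieldState`, `render(_:)` and the string output are hypothetical simplifications for this sketch; SwiftUI's real mechanism is its `View` protocol and `body` property.

```swift
// The state the interface depends on, declared up front.
struct FieldState {
    var isHidden = false
    var isActive = true
}

// The interface is declared as a function of the state: no code mutates it.
func render(_ state: FieldState) -> String {
    if state.isHidden {
        return "<nothing>"
    }
    return state.isActive ? "[ text field ]" : "[ text field (disabled) ]"
}

var state = FieldState()
print(render(state)) // [ text field ]

// A user interaction only changes the state; the description is re-evaluated.
state.isActive = false
print(render(state)) // [ text field (disabled) ]

state.isHidden = true
print(render(state)) // <nothing>
```

Compared with the imperative version, no handler touches the on-screen element directly: changing `state` is the whole interaction.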

Let's make it even clearer: if the interface does not know what can happen to it, it has to keep constant watch over millions of combinations of possible changes. There are lots of observers waiting for events: a color change, a field moving, a font changing, an image moving… every possible state change the interface can undergo is permanently being listened for, and the interface has to be ready to represent it.


But if I build a declarative interface, I am defining, before it is drawn, which states it has to observe and what it has to do when they change. The system only has to watch those, because everything else is immutable, and it can even process all the combinations in advance. So the processing cost of managing that user interface is far lower, because when building the interface we are already declaring what can and cannot be done: all its rules. The system just has to watch for them, follow them, and know before running how many possible combinations that interface will have. Nothing else.
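The argument that declared rules let the system know every combination ahead of time can be illustrated with a small sketch: because the declared state space here is finite (two Bools), every possible rendering can be computed once into a lookup table. This only illustrates the reasoning above; it is not a description of how SwiftUI works internally, and all names are hypothetical.

```swift
// Hypothetical declared state: the only things this interface observes.
struct FieldState: Hashable {
    var isHidden: Bool
    var isActive: Bool
}

// The declared rule mapping each state to what should be drawn.
func render(_ state: FieldState) -> String {
    if state.isHidden { return "<nothing>" }
    return state.isActive ? "[ text field ]" : "[ text field (disabled) ]"
}

// Because the rules are known before anything is drawn, every combination
// can be enumerated and resolved in advance.
var precomputed: [FieldState: String] = [:]
for hidden in [false, true] {
    for active in [false, true] {
        let state = FieldState(isHidden: hidden, isActive: active)
        precomputed[state] = render(state)
    }
}

// At run time, a state change becomes a simple lookup.
let current = FieldState(isHidden: false, isActive: false)
print(precomputed.count)          // 4
print(precomputed[current] ?? "") // [ text field (disabled) ]
```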

Many libraries today, like Google's Flutter or Facebook's React, already use declarative interfaces, and now Apple has jumped on the bandwagon of this development trend with SwiftUI, built on its Swift language.

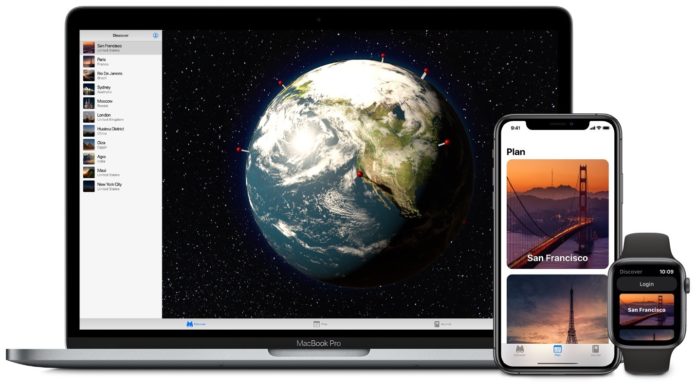

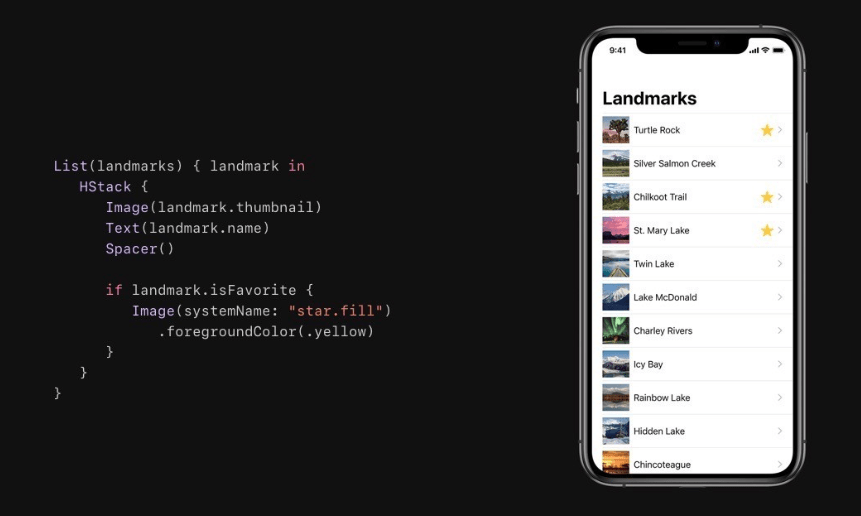

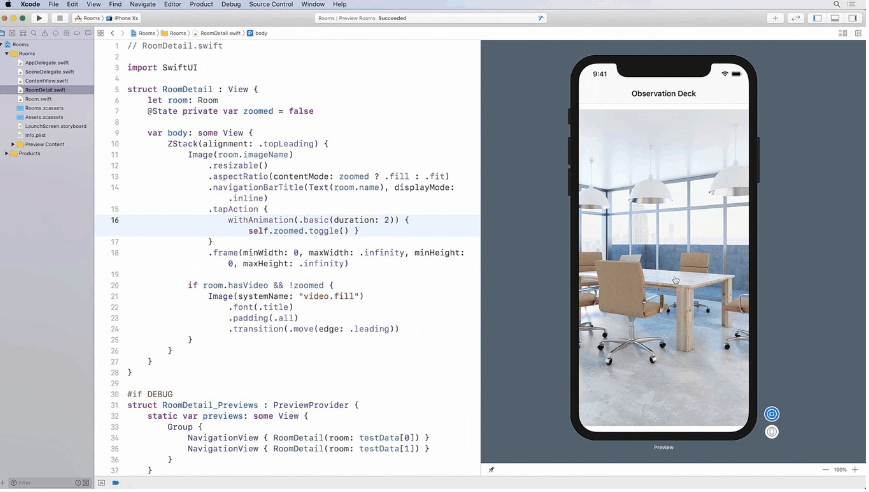

With SwiftUI we will also see, in real time, the correspondence between the code declaring our interface and its result. Using its components, the interface is built and drawn automatically on iPhone, iPad, macOS, watchOS and tvOS. Goodbye to the dreaded constraints, the rules we had to provide so that our interface could be drawn on any device, whatever its size. Now layout is handled automatically by the system, much as a browser does with HTML.


Of course, we have plenty of properties that let us draw whatever we want without problems: animations included, dynamic data sources bound to the interface, events… everything we need.

A small example of structure

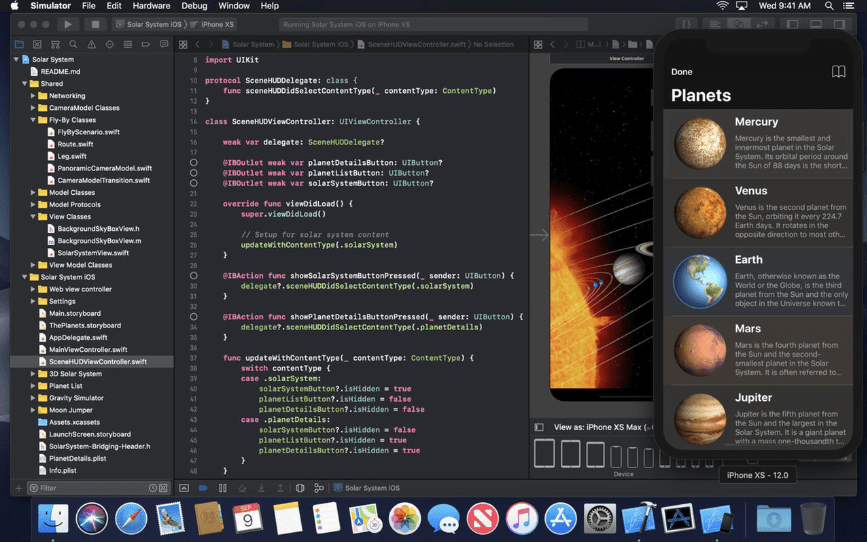

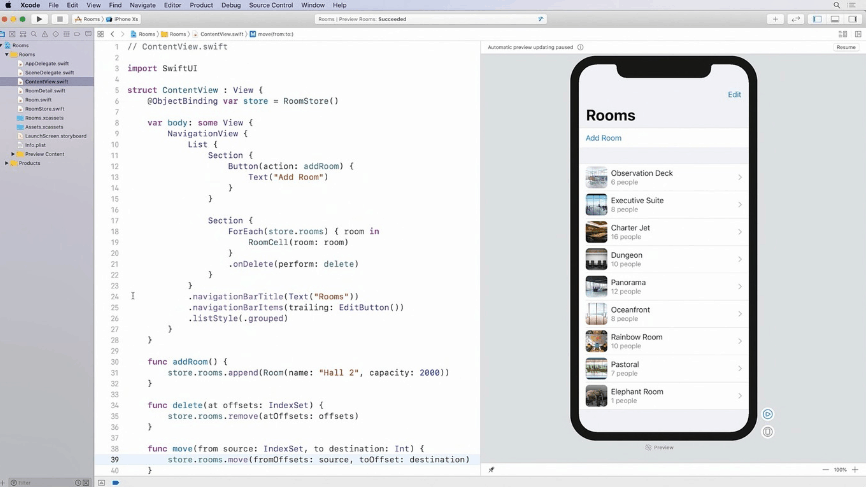

When we create a new project in Xcode 11, the first thing we see is a new checkbox asking whether we want to use SwiftUI. This creates a project with a new scene delegate (used, among other things, for the new feature that lets several copies of the same app be open at the same time), but without the usual start Storyboard where we normally begin building our interface.


In its place there is a file called ContentView, which the scene uses to generate the start screen in code. Without going into much technical detail, it works with a Swift struct (a structure) that I declare as conforming to View. This requires it to include a body property that returns the different constructors: the content of the screen.

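```swift
import SwiftUI

// A SwiftUI screen is a struct that conforms to the View protocol.
struct ContentView: View {
    // `body` describes what the screen contains.
    var body: some View {
        Text("Hello World")
    }
}
```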

What is inside body is a constructor that creates a text and places it right in the center of the screen. It's that simple. If I want the text to be bold, I add a period after the closing parenthesis and call the bold() modifier.

```swift
Text("Hello World").bold()
```
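Modifiers like `bold()` return a new, modified value rather than changing the original one. That pattern can be imitated in plain Swift; `TextSketch` and its `bold()` and `italic()` methods are hypothetical for this sketch and are not SwiftUI's `Text`.

```swift
// Hypothetical value type imitating a text view with modifiers.
struct TextSketch {
    let content: String
    var isBold = false
    var isItalic = false

    init(_ content: String) {
        self.content = content
    }

    // Each modifier returns a modified copy; the original value is untouched.
    func bold() -> TextSketch {
        var copy = self
        copy.isBold = true
        return copy
    }

    func italic() -> TextSketch {
        var copy = self
        copy.isItalic = true
        return copy
    }
}

let plain = TextSketch("Hello World")
let styled = plain.bold().italic() // modifiers chain with a period

print(plain.isBold, styled.isBold, styled.isItalic) // false true true
```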

If I want to put more than one element, I have to group them, for example in a stacked view. With VStack I create one and put inside it whatever I want.

```swift
import SwiftUI

struct ContentView: View {
    var body: some View {
        // VStack stacks its children vertically.
        VStack {
            Text("Hello World").bold()
            Image(systemName: "book")
        }
    }
}
```

This gives me a vertical stack with the text on top and, below it, an image from the new SF Symbols set that represents a book. Now I add a button.

```swift
import SwiftUI

struct ContentView: View {
    var body: some View {
        VStack {
            Text("Hello World").bold()
            Image(systemName: "book")
            // action: what runs on tap; label: what the button displays.
            Button(action: {
                print("Tapped")
            }, label: {
                Text("I'm a button")
            })
        }
    }
}
```

Here I tell it that I want a button and pass it two parameters as blocks of code (closures): action, what happens when the button is pressed, and label, what is displayed on the button, in this case a text. I could also have passed an image, and the result would have been an image button.
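Two Swift features make this syntax possible: closures passed as parameters, and result builders (SwiftUI's `@ViewBuilder` is one) that turn the list of statements inside `VStack { … }` into children. The plain-Swift sketch below imitates both; `Node`, `NodeBuilder`, `vstack`, `button` and `describe` are hypothetical names, not SwiftUI API, and `@resultBuilder` assumes Swift 5.4 or later.

```swift
// Hypothetical, simplified view tree.
indirect enum Node {
    case text(String)
    case image(String)
    case button(label: Node, action: () -> Void)
    case vstack([Node])
}

// A minimal result builder: collects the statements in a closure as children.
@resultBuilder
struct NodeBuilder {
    static func buildBlock(_ children: Node...) -> [Node] {
        return children
    }
}

func vstack(@NodeBuilder _ content: () -> [Node]) -> Node {
    return .vstack(content())
}

// Like Button(action:label:): one closure for behavior, one for content.
func button(action: @escaping () -> Void, label: () -> Node) -> Node {
    return .button(label: label(), action: action)
}

// Describes the tree as indented text so it can be inspected.
func describe(_ node: Node, indent: String = "") -> String {
    switch node {
    case .text(let s):
        return indent + "Text(\(s))"
    case .image(let name):
        return indent + "Image(\(name))"
    case .button(let label, _):
        return indent + "Button\n" + describe(label, indent: indent + "  ")
    case .vstack(let children):
        let inner = children.map { describe($0, indent: indent + "  ") }
        return ([indent + "VStack"] + inner).joined(separator: "\n")
    }
}

var taps = 0
let screen = vstack {
    Node.text("Hello World")
    Node.image("book")
    button(action: { taps += 1 }, label: { Node.text("I'm a button") })
}

print(describe(screen))

// Simulate a tap by running the stored action closure.
if case .vstack(let children) = screen, case .button(_, let action) = children[2] {
    action()
}
print(taps) // 1
```

The label closure runs immediately to build the tree, while the action closure is stored and only runs when the (simulated) tap happens, which mirrors the split between label and action described above.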

This is, obviously, the simplest possible example: just a small glimpse of all the possibilities for building interfaces. If we use macOS Catalina, we can see the canvas next to the code and add elements by dragging and dropping them into the interface, seeing how it looks, and any change made in that builder modifies the code in real time. Likewise, any change in the code updates the interface instantly.


A first step

This technology is a major step forward. It works as a native Swift library that takes full advantage of the language and its features, and it is linked to another new library for reactive programming (asynchronous events) called Combine, which we will cover in more detail later if you are interested.

But beware, we have to be realistic. Creating an interface-building library from scratch is an epic task. Apple has only just started, and it lets us begin using and learning (because we have to learn almost from scratch) a new way of building interfaces. Right now, though, it works as a layer on top of the existing UIKit; it is not independent at the infrastructure level. Will it always be like this? We don't think so: Apple will make it independent of that engine. Swift itself followed the same process: in its first version, Swift was largely a different way of writing Objective-C, onto which many of its elements and data types were mapped. Now its architecture is completely independent.


We are confident that what is now a layer over the old UIKit library, albeit one that allows a different and more practical way of building apps, will gradually gain its own architecture until it is completely independent of UIKit: a new library which, let's not forget, is compatible with all Apple platforms.

As happened with Swift when it launched in 2014, we are looking at the future, and we can start working with it today. And we are back to the usual: Apple has not invented anything; all of this already existed. But Apple builds its own version with a clear goal: to be the one that does it best. For now, it seems to be on the right track.
