Voices, Faces, Sensations: New Equation Reveals How Real Neurons Differentiate Our World

New findings “can also be used to create next-generation artificial intelligences that learn as real neural networks do.”

A joint research effort involving the RIKEN Center for Brain Science in Japan, the University of Tokyo, and University College London has shown how neurons self-organize as they learn.

They found that as neurons “learn,” they self-organize in line with a mathematical theory called the free energy principle. The principle accurately predicted how real neural networks reorganize to separate different inputs.

The work also shows how changes in neural excitability can disrupt this organizing process. The findings could inform the development of AI systems that learn the way animals do, and could help explain cases in which learning is impaired.

The study was published today in Nature Communications.

The brain’s ability to tell voices, faces, or odors apart depends on neural networks organizing themselves so that they can separate the different streams of incoming information.

This self-organization changes the connections between neurons and is the basis of learning in the brain.

Takuya Isomura of RIKEN CBS and colleagues hypothesized that this neural reorganization follows the mathematical rules of the free energy principle.

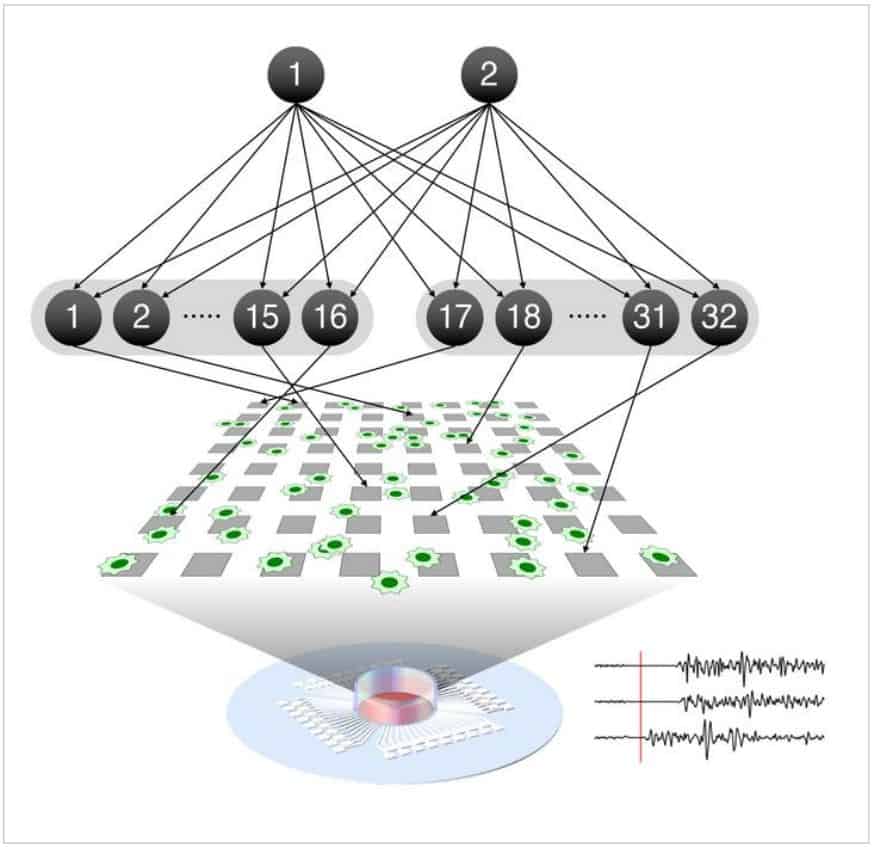

To test this hypothesis, they used neurons taken from the brains of rat embryos and grown in culture dishes on a grid of electrodes.

Learning, in essence, is the outcome of neural reorganization: after learning, specific neurons respond to particular stimuli. The team reproduced this in the lab by stimulating the neurons through the electrode grid with specific patterns, each pattern mixing signals from distinct hidden sources.
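The stimulation scheme described above can be illustrated with a small simulation. This is only a sketch under stated assumptions: the article does not give the number of electrodes, the number of hidden sources, or the mixing probabilities, so `n_electrodes=32`, two binary sources, and `p_preferred=0.75` are hypothetical values chosen for illustration, as is the function name `make_stimuli`.

```python
import numpy as np

def make_stimuli(n_trials=1000, n_electrodes=32, p_preferred=0.75, seed=0):
    """Mix two hidden binary sources into per-electrode stimulation patterns.

    All parameter values are illustrative assumptions, not taken from the study.
    n_electrodes is assumed to be even.
    """
    rng = np.random.default_rng(seed)
    # Two independent hidden sources, each on or off with equal probability.
    sources = rng.integers(0, 2, size=(n_trials, 2))
    # The first half of the electrodes prefers source 0, the second half source 1.
    preferred = np.repeat([0, 1], n_electrodes // 2)
    # On each trial, an electrode follows its preferred source with probability
    # p_preferred and the other source otherwise.
    use_preferred = rng.random((n_trials, n_electrodes)) < p_preferred
    chosen = np.where(use_preferred, preferred, 1 - preferred)
    # Look up the value of the chosen source for every trial and electrode.
    stimuli = np.take_along_axis(sources, chosen, axis=1)
    return sources, stimuli

sources, stimuli = make_stimuli()
half = stimuli.shape[1] // 2
# Fraction of trials on which the first-half electrodes match each source.
match_source0 = (stimuli[:, :half] == sources[:, [0]]).mean()
match_source1 = (stimuli[:, :half] == sources[:, [1]]).mean()
print(f"match with source 0: {match_source0:.2f}, with source 1: {match_source1:.2f}")
```

In this toy setup no single electrode reveals a source directly, so a network that becomes selective for one source has to have separated the mixed inputs, which is the kind of selectivity the article describes.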

After repeated training, the neurons developed selectivity in their responses. Adding drugs that alter neuronal activity showed that the cultured neurons behaved like neurons in a working brain.

The free energy principle proposes that this self-organization follows a course that minimizes the free energy of the system. Using the real neural data, the researchers reverse-engineered a model based on this principle and used it to predict neural responses and connection strengths during the learning period.

The accuracy of the model’s predictions indicates that the initial state of the neurons can be used to predict how the network will change during learning.
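For readers who want the quantity behind the term, the free energy principle is usually stated in terms of variational free energy. The article does not give the exact equation used in the study, so the expression below is the standard textbook form, and the symbols are assumptions introduced here: $o$ for the sensory inputs, $s$ for the hidden sources, $p(o, s)$ for the network's internal model, and $q(s)$ for its current belief about the sources.

$$
F(o, q) = \mathbb{E}_{q(s)}\big[\ln q(s) - \ln p(o, s)\big] = D_{\mathrm{KL}}\big[q(s)\,\|\,p(s \mid o)\big] - \ln p(o)
$$

Because the Kullback-Leibler term is never negative, $F$ is an upper bound on the surprise $-\ln p(o)$. Lowering $F$ therefore pulls the belief $q(s)$ toward the true posterior over the hidden sources, which is one way to read the claim that the network learns to separate its inputs.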

Isomura said, “Our results suggest that the free-energy principle is the self-organizing principle of biological neural networks. It predicted how learning occurred upon receiving particular sensory inputs and how it was disrupted by alterations in network excitability induced by drugs.”

Isomura further speculated on the potential long-term applications, stating, “Our technique will allow modelling the circuit mechanisms of psychiatric disorders and the effects of drugs such as anxiolytics and psychedelics. Generic mechanisms for acquiring the predictive models can also be used to create next-generation artificial intelligences that learn as real neural networks do.”

Source: DOI 10.1038/s41467-023-40141-z

Image Credit: Shutterstock