American developers have created an algorithm capable of predicting the movement of human hands in his speech. Receiving only audio recording of speech, he creates an animated model of the human body, and then generates a realistic video based on it. A development article will be presented at the CPVR 2019 conference.

The main way to convey information to others around people is speech. However, besides her, we also actively use gestures in our conversation, reinforcing the spoken words and giving them an emotional touch. By the way, according to the most likely hypothesis of the development of the human language, initially the ancestors of man conversely communicated mainly with gestures, but the active use of hands in everyday life led to the development of sound communication and made it basic. One way or another, the process of a person uttering words in a conversation is closely related to the movements of his hands.

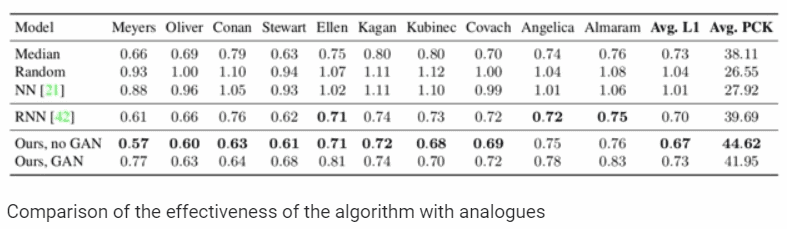

Researchers led by Jitendra Malik from the University of California at Berkeley used this connection to predict a person’s gestures in a conversation based on the voice component of his speech. The work of the algorithm can be divided into two stages: first, it predicts the movement of hands through the audio recording of speech, and then visualizes the predicted gestures using the algorithm presented in 2018 by a related group of researchers. Then the developers taught the neural network to transfer the movement of people between videos, using an intermediate stage with the recognition of a person’s posture.

At the first stage, the UNet algorithm on the basis of the convolutional neural network accepts a two-dimensional spectrogram of audio recording and turns it into a one-dimensional intermediate signal. This signal is then transformed into a sequence of poses represented as a skeletal model with 49 key points reflecting parts of the arms, shoulders and neck. After that, the sequence of poses is transmitted to the visualization algorithm, which turns it into a video.

In order to teach the algorithm to convert speech to movement, the researchers collected datasets consisting of records with a total length of 144 hours. There were TV presenters, lecturers and religious preachers on the recordings — such a choice was due to the fact that it was easy for them to find long recordings of speech with gesticulations. Using the OpenPose algorithm, the researchers compared each frame from the dataset with a skeleton model. Obtaining during training the recording of speech and frames with the finished model, the algorithm has learned to create realistic videos. It should be noted that the approach chosen by the authors implies that for correct work it is necessary to train a separate neural network model for a specific person.

On the video demonstrated by the researchers it can be seen that some movements do not fully correspond to the real movements of a person on the original recording. For example, often the algorithm selects the correct movement, but uses the wrong hand. However, this is a consequence of a fundamental lack of approach rather than its incorrect implementation. The fact is that gestures during speech are not invariant – different gestures can correspond to the same phrase, spoken by the same person.