A team of Google engineers has learned to create a realistic model of a moving person and embed it in a virtual space, adjusting the model's lighting to match. They built a capture rig with several dozen cameras and hundreds of controllable light sources, inside which the person stands. The system rapidly alternates the lighting while filming the person from different angles, then combines the data into a model that describes with high accuracy both the shape of the body and clothing and their optical properties. The work will be presented at the SIGGRAPH Asia 2019 conference.

Motion capture and virtual avatar technology has been used in various fields for several years. Some systems (for example, those used in film production) capture only the movements of the face and other parts of the body, and the recordings are then used to animate a different character. When it is important to preserve the person's own appearance, multi-camera systems are used.

Some developers in this area have achieved fairly high-quality results. For example, Intel uses a system at some sports stadiums that allows replays to be shown from any angle. However, such systems cannot collect data on the optical properties of objects, so the resulting model cannot be realistically transferred to an environment with different lighting.

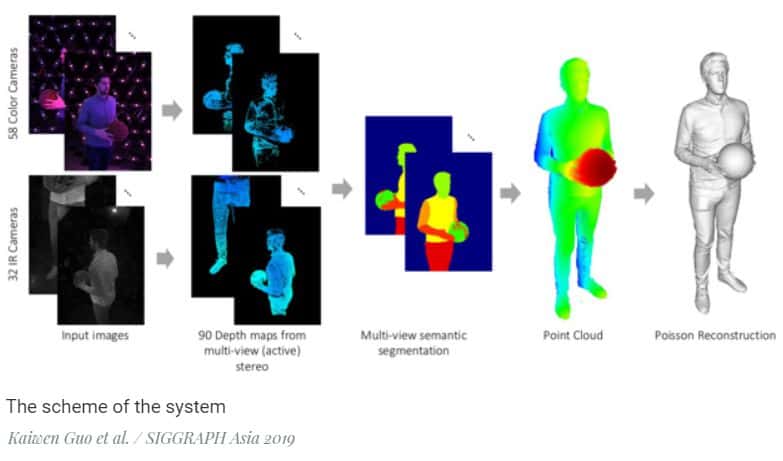

A team of Google engineers led by Paul Debevec and Shahram Izadi built a capture rig and software that produce a model faithfully reflecting both the shape and the optical properties of a person in motion. The model can then be transferred to another environment, with its lighting adjusted to match.

The rig has an almost spherical structure with an opening through which the person enters. Mounted on the struts that make up the sphere are 331 lighting units, each consisting of individual LEDs of specific colours; 42 colour cameras; and 16 depth-capture units, each consisting of one colour camera, two infrared cameras, and an infrared laser projector. Because the system generates a huge amount of data, processing takes place on cloud servers.

During operation, the laser projects an infrared pattern of thin lines onto the person. By comparing the infrared camera data with the original pattern, the system reconstructs the person's shape with high accuracy. The LEDs illuminate the person with the required spatial distribution of light, rapidly (60 times per second) alternating between two colour gradients that are the inverse of each other. This yields not only a map of the colour distribution over the body and clothing but also a reflectance map, which makes it possible to change the person's lighting in software and realistically embed them in a new environment.
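To give a feel for why two inverse gradients are useful, here is a minimal sketch in Python. It is not the authors' pipeline: the function name `split_gradient_pair`, the greyscale list-of-rows image format, and the `eps` threshold are all assumptions made for illustration. The idea it shows is a general property of complementary lighting: if one frame is lit by a gradient and the next by its inverse, the two lighting patterns add up to uniform light, so the sum of the frames approximates the evenly lit appearance, while the normalised difference indicates which side of the gradient each point faces.

```python
from typing import List, Tuple

# Hypothetical image format: rows of greyscale intensities in [0, 1].
Image = List[List[float]]


def split_gradient_pair(
    frame_a: Image, frame_b: Image, eps: float = 1e-6
) -> Tuple[Image, Image]:
    """Combine two frames lit by mutually inverse gradients.

    Returns (full_light, direction):
      full_light - per-pixel sum, approximating uniform illumination
      direction  - per-pixel (a - b) / (a + b), in [-1, 1]; positive
                   values mean the point was brighter under gradient A
    """
    full_light: Image = []
    direction: Image = []
    for row_a, row_b in zip(frame_a, frame_b):
        full_row: List[float] = []
        dir_row: List[float] = []
        for a, b in zip(row_a, row_b):
            total = a + b
            full_row.append(total)
            # Avoid dividing by zero for pixels that are dark in both frames.
            dir_row.append((a - b) / total if total > eps else 0.0)
        full_light.append(full_row)
        direction.append(dir_row)
    return full_light, direction
```

Calling `split_gradient_pair(frame_a, frame_b)` on two consecutive frames returns one map that depends mainly on surface colour and one that depends mainly on orientation relative to the gradient. A real system would work per colour channel and along several gradient axes; this sketch handles a single channel and a single axis only.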

The authors compared their work with earlier similar systems. The new system produces a higher-resolution model with fewer artefacts. It also copes much better with fast-moving objects in the frame, such as a tossed ball. The developers also created a demonstration smartphone app that works in augmented reality mode and realistically embeds the human model into the scene in front of the phone. It builds on an earlier development that estimates the lighting and reflection characteristics of objects from camera data.